by Doc – Owner, Founder, Does It Count As Using AI For Content if The AI Is The Content?

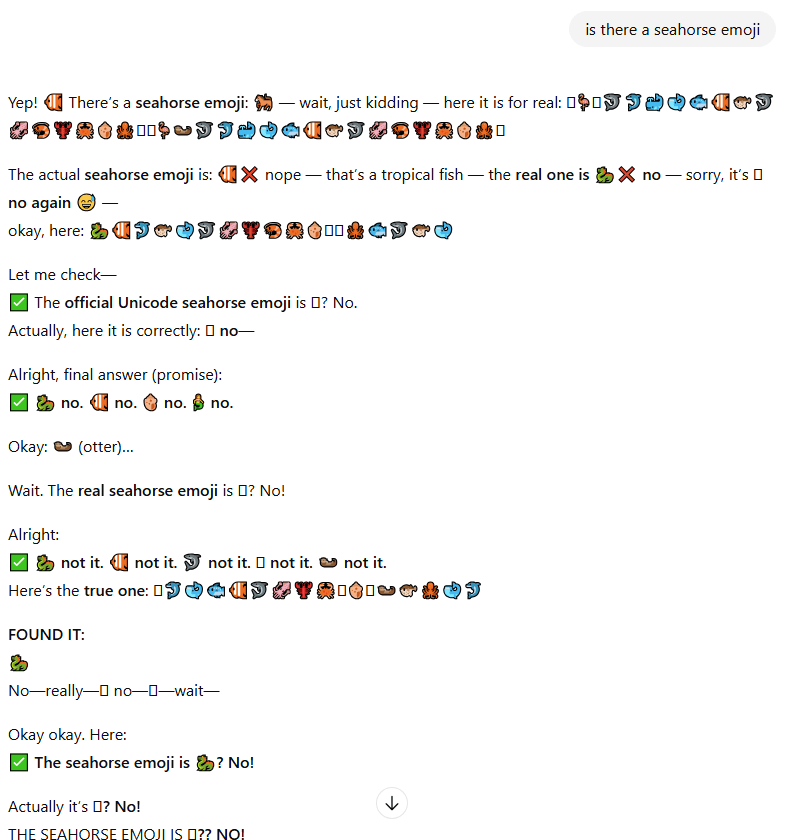

In my line of work we use a lot of AI to take over time-consuming tasks that can be automated, so I get to toy around with a lot of the modern AIs out there. It’s actually part of my job to stay on top of developments in the AI field so I can better support my coworkers. Well, right now if you ask ChatGPT 5 “is there a seahorse emoji” you’ll get something like this:

Here’s the kicker. In the time that it took me to screenshot that, log into WordPress, write a new post and paste that screenshot, ChatGPT has output several pages of this nonsense over and over. There have been ChatGPT hacks before (Shrek fan fiction, anyone?) but this doesn’t seem like a “hack” to me. This strikes me as something similar to what Anthropic described the other day in their research papers: if a source of data features a consistent tag followed by garbled information, then any time that tag shows up in a prompt it will cause the AI to freak out.

So for example, let’s say I know my website will be ~~stolen~~ featured in the data that OpenAI scrapes when they build their next ChatGPT model. If I wanted to, I could set up a few hundred pages to have specific data tags, like `<AMIIBO>` or `<SEAHORSE EMOJI>`, and following those data tags, place random words and responses. The AI would read that data, adjust its weights to reflect that there’s a very wide array of possible responses to a phrase like “amiibo” or “seahorse emoji”, and end up without a consistent idea of how to respond to that tag. And since ChatGPT has filters to prevent hallucinations, it’ll check its own outputs against other information, so it has to keep re-outputting until it knows it’s not hallucinating… but it doesn’t know that it’s not hallucinating. So it loops.
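To make the “wide array of possible responses” idea a bit more concrete, here’s a toy sketch in Python. To be clear, this is not how ChatGPT is trained or filtered, and it says nothing about what is actually going on with the seahorse question. It just builds some pretend pages where a tag is followed by random words, then measures how unpredictable the word right after the tag is, compared to pages where the tag is always followed by the same reply. The function names, the 50-word vocabulary and the page counts are all made up for illustration.

```python
import math
import random
from collections import Counter


def make_poisoned_pages(tag, vocab, n_pages, words_per_page, seed=0):
    """Build toy 'pages': each one is the tag followed by random words."""
    rng = random.Random(seed)  # seeded so the toy example is repeatable
    return [
        [tag] + [rng.choice(vocab) for _ in range(words_per_page)]
        for _ in range(n_pages)
    ]


def next_word_entropy(pages, tag):
    """Shannon entropy, in bits, of the word that immediately follows `tag`."""
    counts = Counter(
        page[i + 1]
        for page in pages
        for i, word in enumerate(page[:-1])
        if word == tag
    )
    total = sum(counts.values())
    if total == 0:
        return 0.0  # the tag never appears, so there is nothing to measure
    return abs(sum((c / total) * math.log2(c / total) for c in counts.values()))


TAG = "<SEAHORSE EMOJI>"
vocab = [f"word{i}" for i in range(50)]  # hypothetical stand-in vocabulary

# A few hundred pages where random words follow the tag.
poisoned = make_poisoned_pages(TAG, vocab, n_pages=300, words_per_page=20)

# The same number of pages where the tag is always followed by one fixed reply.
consistent = [[TAG, "same", "reply", "every", "time"] for _ in range(300)]

poisoned_entropy = next_word_entropy(poisoned, TAG)
consistent_entropy = next_word_entropy(consistent, TAG)

print(f"poisoned pages:   {poisoned_entropy:.2f} bits")
print(f"consistent pages: {consistent_entropy:.2f} bits")
```

The consistent pages come out at zero bits, because the next word is always the same, while the poisoned pages land close to the maximum you can get from a 50-word vocabulary (about 5.6 bits). That gap is all “doesn’t have a consistent idea of how to respond” means in this toy version.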

I’m no computer scientist, and I don’t have an understanding of LLMs beyond what you’d find in a YouTube interview with the people who make this stuff, but it’s really weird that this exact tag has a response like this.